
We present Orbit, a unified and modular framework for robotics and robot learning, powered by NVIDIA Isaac Sim. Its modular design makes it easy and efficient to create robotic environments with photorealistic scenes and fast, accurate rigid- and soft-body simulation.

With Orbit, we provide a suite of benchmark tasks of varying difficulty, from single-stage cabinet opening and cloth folding to multi-stage tasks such as room reorganization. The tasks include variations in objects' physical properties and placements, material textures, and scene lighting. To support diverse observation and action spaces, we include various fixed-arm and mobile manipulators with different controller implementations and physics-based sensors. By leveraging GPU-based parallelization, Orbit can train reinforcement learning policies and collect large demonstration datasets from hand-crafted or expert solutions in a matter of minutes. In summary, we offer fourteen robot articulations, three different physics-based sensors, twenty learning environments, wrappers for four different learning frameworks, and interfaces for connecting to a real robot.

With this framework, we aim to support various research areas, including representation learning, reinforcement learning, imitation learning, and motion planning. We hope it helps establish interdisciplinary collaborations between these communities, and that its modularity makes it easy to extend to more tasks and applications in the future.

With Orbit, you can use the RL framework of your choice and focus on algorithmic research. With RSL-RL and RL-Games, you can train a policy at up to 100k frames per second (FPS), while with Stable-Baselines3 you can train a policy at up to 10k FPS.

Orbit includes out-of-the-box support for various peripheral devices, such as a keyboard, SpaceMouse, and gamepad. You can use these devices to teleoperate the robot and collect demonstrations for behavior cloning.
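The following minimal sketch shows how keyboard teleoperation for demonstration collection can work in principle. The key bindings, the `TeleopCommand` type, the `keys_to_command` helper, and the toy end-effector observation are all hypothetical and are not Orbit's actual device interface. Each pressed key nudges one Cartesian axis of a delta-pose command, one key toggles the gripper, and every (observation, action) pair is stored for behavior cloning.

```python
from dataclasses import dataclass

# Hypothetical key bindings (not Orbit's actual mapping): each key nudges one
# Cartesian axis of the end-effector position command in a fixed direction.
KEY_TO_AXIS_SIGN = {
    "w": (0, +1), "s": (0, -1),  # +x / -x
    "a": (1, +1), "d": (1, -1),  # +y / -y
    "q": (2, +1), "e": (2, -1),  # +z / -z
}
GRIPPER_TOGGLE_KEY = "k"  # hypothetical toggle key


@dataclass
class TeleopCommand:
    delta_pos: tuple      # (dx, dy, dz) end-effector displacement in meters
    gripper_closed: bool  # desired gripper state


def keys_to_command(pressed, gripper_closed, step=0.01):
    """Convert the set of currently pressed keys into a delta-pose command."""
    delta = [0.0, 0.0, 0.0]
    for key in pressed:
        if key in KEY_TO_AXIS_SIGN:
            axis, sign = KEY_TO_AXIS_SIGN[key]
            delta[axis] += sign * step
    if GRIPPER_TOGGLE_KEY in pressed:
        gripper_closed = not gripper_closed
    return TeleopCommand(tuple(delta), gripper_closed)


# Record (observation, action) pairs for behavior cloning from a short key sequence.
demo = []
ee_pos = (0.5, 0.0, 0.3)  # toy observation: end-effector position
gripper_closed = False
for pressed in [{"w"}, {"w", "q"}, {"k"}]:
    cmd = keys_to_command(pressed, gripper_closed)
    demo.append((ee_pos, cmd))
    gripper_closed = cmd.gripper_closed
    # Toy "simulation": apply the commanded displacement directly.
    ee_pos = tuple(p + d for p, d in zip(ee_pos, cmd.delta_pos))
```

A SpaceMouse or gamepad would replace the discrete key lookup with continuous axis readings. The recorded list has the same shape either way.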

Sense-Model-Plan-Act (SMPA) decomposes the complex problem of reasoning and control into sub-components. With Orbit, you can define and evaluate your own hand-crafted state machines and motion generators.
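To illustrate the plan and act stages, here is a minimal sketch of a hand-crafted state machine for a pick task, paired with a toy proportional motion generator. The states, tolerances, `PickStateMachine`, and `move_towards` are hypothetical names chosen for illustration and do not reflect Orbit's own state-machine API. The state machine chooses a target and a gripper command. The motion generator then moves the end effector toward that target.

```python
import math
from enum import Enum, auto


class State(Enum):
    APPROACH = auto()  # move the end effector to the object
    GRASP = auto()     # close the gripper
    LIFT = auto()      # raise the grasped object
    DONE = auto()


class PickStateMachine:
    """Hand-crafted "plan" stage for a pick task (illustrative, not Orbit's API)."""

    def __init__(self, tol=0.005, lift_height=0.2):
        self.state = State.APPROACH
        self.tol = tol                  # position tolerance in meters
        self.lift_height = lift_height  # how far to raise the object
        self.lift_target = None

    def step(self, ee_pos, object_pos):
        """Apply state transitions, then return (target_position, close_gripper)."""
        if self.state is State.APPROACH and math.dist(ee_pos, object_pos) < self.tol:
            x, y, z = object_pos
            self.lift_target = (x, y, z + self.lift_height)
            self.state = State.GRASP
        elif self.state is State.GRASP:
            self.state = State.LIFT  # assume the gripper closes within one step
        elif self.state is State.LIFT and math.dist(ee_pos, self.lift_target) < self.tol:
            self.state = State.DONE

        if self.state is State.APPROACH:
            return object_pos, False
        if self.state is State.GRASP:
            return ee_pos, True
        return self.lift_target, True  # LIFT and DONE hold the lifted pose


def move_towards(pos, target, gain=0.5):
    """Toy proportional motion generator: cover a fixed fraction of the error."""
    return tuple(p + gain * (t - p) for p, t in zip(pos, target))


sm = PickStateMachine()
ee, obj = (0.4, 0.0, 0.4), (0.5, 0.1, 0.05)
for _ in range(100):
    target, close_gripper = sm.step(ee, obj)
    if sm.state is State.DONE:
        break
    ee = move_towards(ee, target)
```

Keeping the transition logic separate from the motion generator lets either piece be swapped out, for example replacing `move_towards` with a differential inverse-kinematics controller, without touching the other.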

It is possible to connect a physical Franka Emika arm to Orbit using ZeroMQ: joint commands computed in the framework are sent to the robot, and the robot's state is read back from it.
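The source does not describe the message format used over ZeroMQ. The sketch below therefore assumes a hypothetical one: raw little-endian float64 arrays with no header, 7 joint position targets per command, and 7 positions followed by 7 velocities per state reply. With pyzmq, the encoded bytes could be passed to `socket.send()` and the reply obtained with `socket.recv()`. Only the payload encoding is shown here, so the example runs without a robot or a ZeroMQ installation.

```python
import struct

NUM_JOINTS = 7  # the Franka Emika Panda arm has 7 joints

# Hypothetical wire format: little-endian float64 values, no header.
COMMAND_FMT = "<" + "d" * NUM_JOINTS      # joint position targets
STATE_FMT = "<" + "d" * (2 * NUM_JOINTS)  # joint positions, then joint velocities


def encode_command(joint_targets):
    """Pack joint position targets (radians) into bytes for sending."""
    if len(joint_targets) != NUM_JOINTS:
        raise ValueError(f"expected {NUM_JOINTS} joint targets, got {len(joint_targets)}")
    return struct.pack(COMMAND_FMT, *joint_targets)


def decode_state(payload):
    """Unpack a state reply into (joint_positions, joint_velocities) lists."""
    values = struct.unpack(STATE_FMT, payload)
    return list(values[:NUM_JOINTS]), list(values[NUM_JOINTS:])


# Example joint targets in radians.
payload = encode_command([0.0, -0.785, 0.0, -2.356, 0.0, 1.571, 0.785])
```

A fixed binary layout keeps messages small and parsing cheap. A self-describing format such as JSON would be easier to debug, but it is slower to parse at high control rates.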

To match complex actuator dynamics (e.g., delays and friction), you can easily incorporate different actuator models into the simulation through Orbit. Together with various domain randomization tools, this functionality facilitates training a policy in simulation and transferring it to the real robot. Here we show a legged-locomotion policy trained in simulation and transferred to the ANYmal-D robot. The robot uses series elastic actuators (SEAs), which have non-linear dissipation and hard-to-model delays. To bridge the sim-to-real gap, we therefore use an MLP-based actuator model in simulation to account for these actuator dynamics.
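Below is a minimal sketch of what an MLP-based actuator model computes. It assumes the network maps a short history of joint position errors and joint velocities for one joint to a torque, which is a common input choice for learned actuator models. The history length, layer sizes, softsign activation, and random placeholder weights are illustrative assumptions, not the model used for ANYmal-D. In simulation, the predicted torque would be applied to the joint in place of an ideal PD controller's output, so the policy trains against actuator-like behavior, including lag.

```python
import random
from collections import deque

HISTORY = 3  # hypothetical: number of past samples the network sees


def softsign(x):
    return x / (1.0 + abs(x))


class MLPActuatorModel:
    """Learned actuator model for one joint: (error, velocity) history -> torque.

    Weights are random placeholders; in practice they would be fit to data
    recorded on the physical actuator.
    """

    def __init__(self, hidden=32, seed=0):
        rng = random.Random(seed)
        n_in = 2 * HISTORY
        self.w1 = [[rng.gauss(0.0, 0.3) for _ in range(n_in)] for _ in range(hidden)]
        self.b1 = [0.0] * hidden
        self.w2 = [rng.gauss(0.0, 0.3) for _ in range(hidden)]
        self.b2 = 0.0
        # Zero-filled buffers so the first call already has a full input vector.
        self.pos_err = deque([0.0] * HISTORY, maxlen=HISTORY)
        self.vel = deque([0.0] * HISTORY, maxlen=HISTORY)

    def torque(self, q_target, q, qd):
        """Push the newest sample into the history and predict the joint torque."""
        self.pos_err.append(q_target - q)
        self.vel.append(qd)
        x = list(self.pos_err) + list(self.vel)
        hidden = [softsign(sum(w * xi for w, xi in zip(row, x)) + b)
                  for row, b in zip(self.w1, self.b1)]
        return sum(w * h for w, h in zip(self.w2, hidden)) + self.b2
```

Because the input includes past samples, the predicted torque depends on recent history as well as the current error. This is how such a model can reproduce delays that a memoryless PD law cannot.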

Training in Simulation

Trained Policy in Simulation

Deployment on ANYmal-D

Orbit is a general-purpose framework for learning policies for a variety of tasks. Here we show some example tasks that can be easily implemented in Orbit.

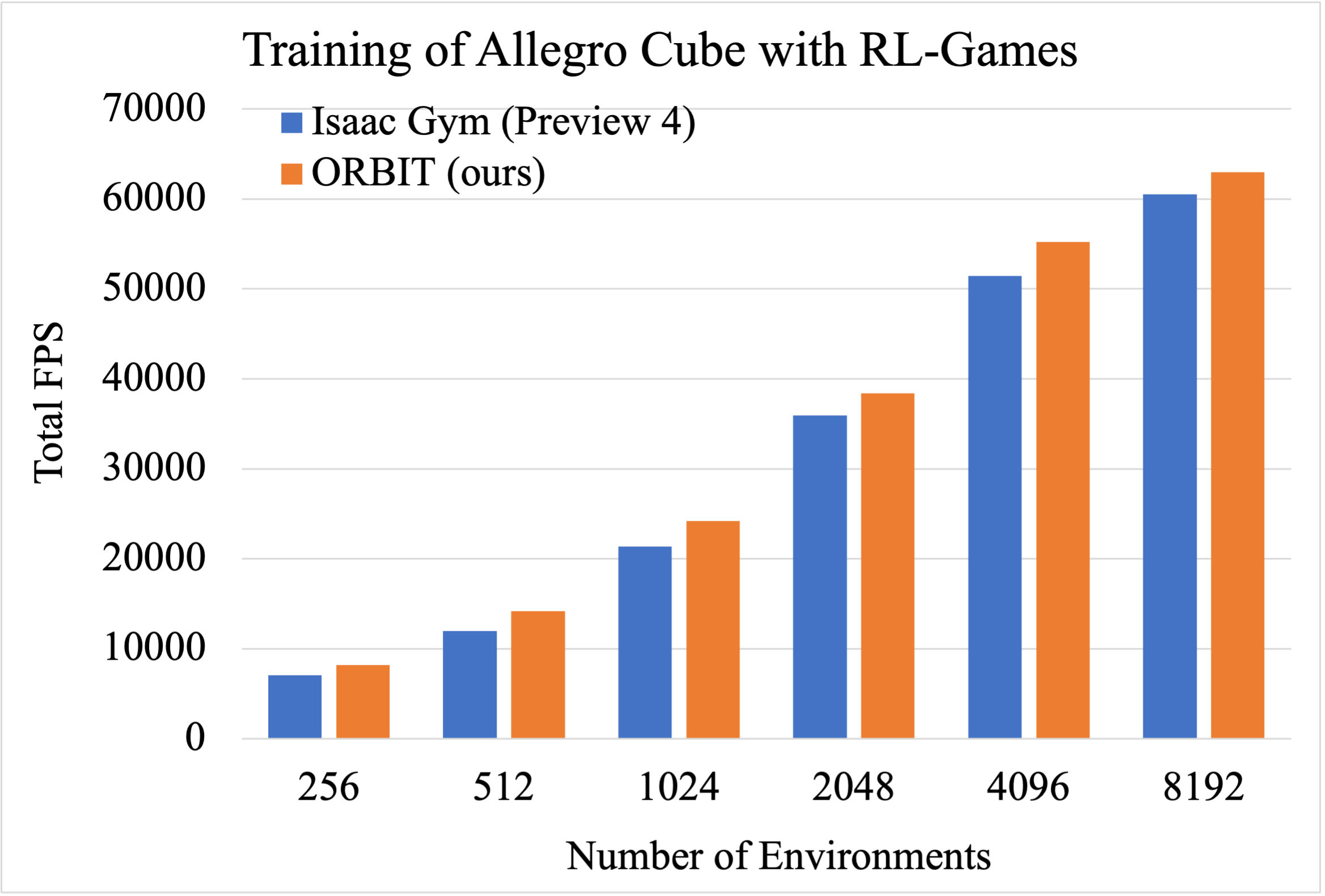

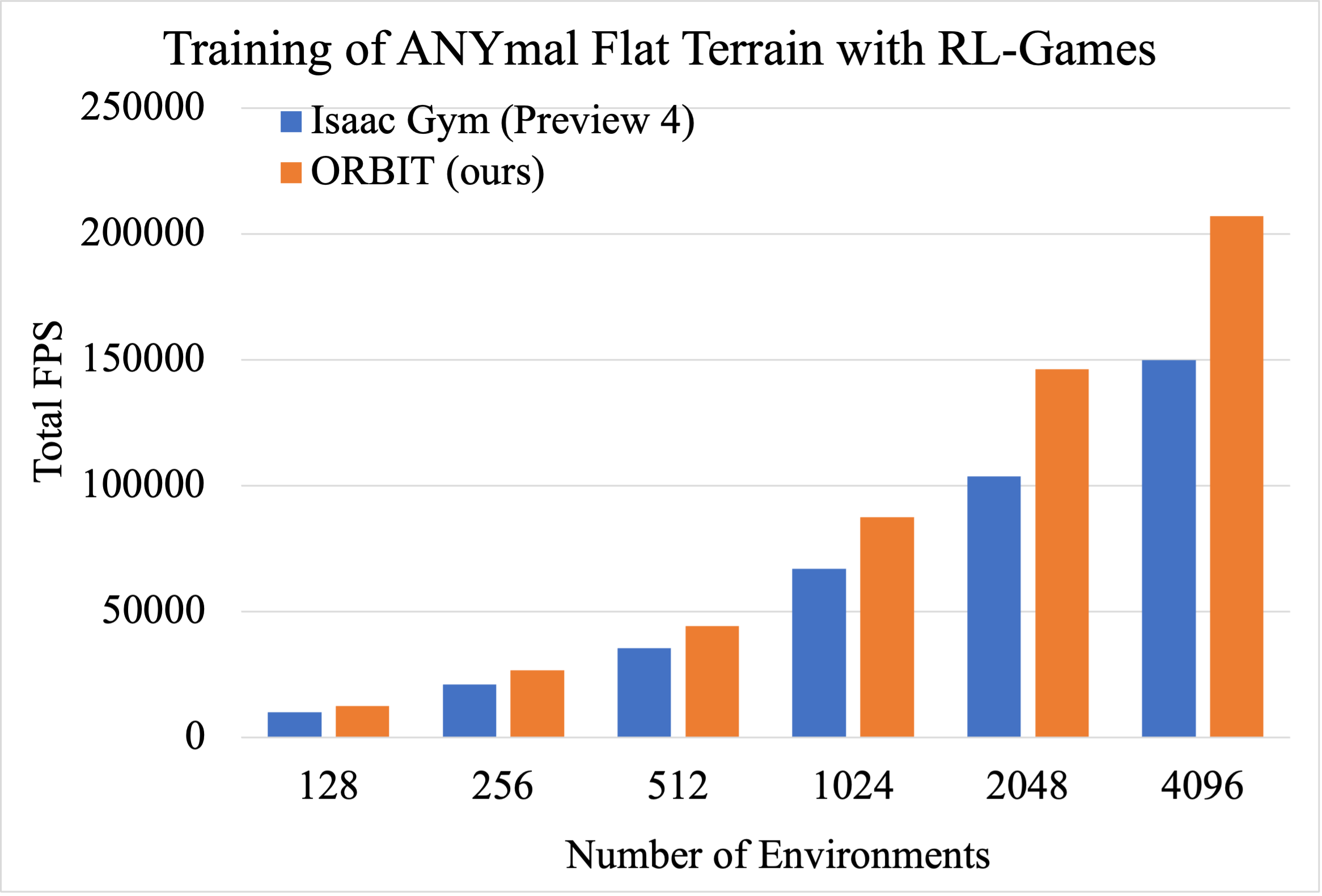

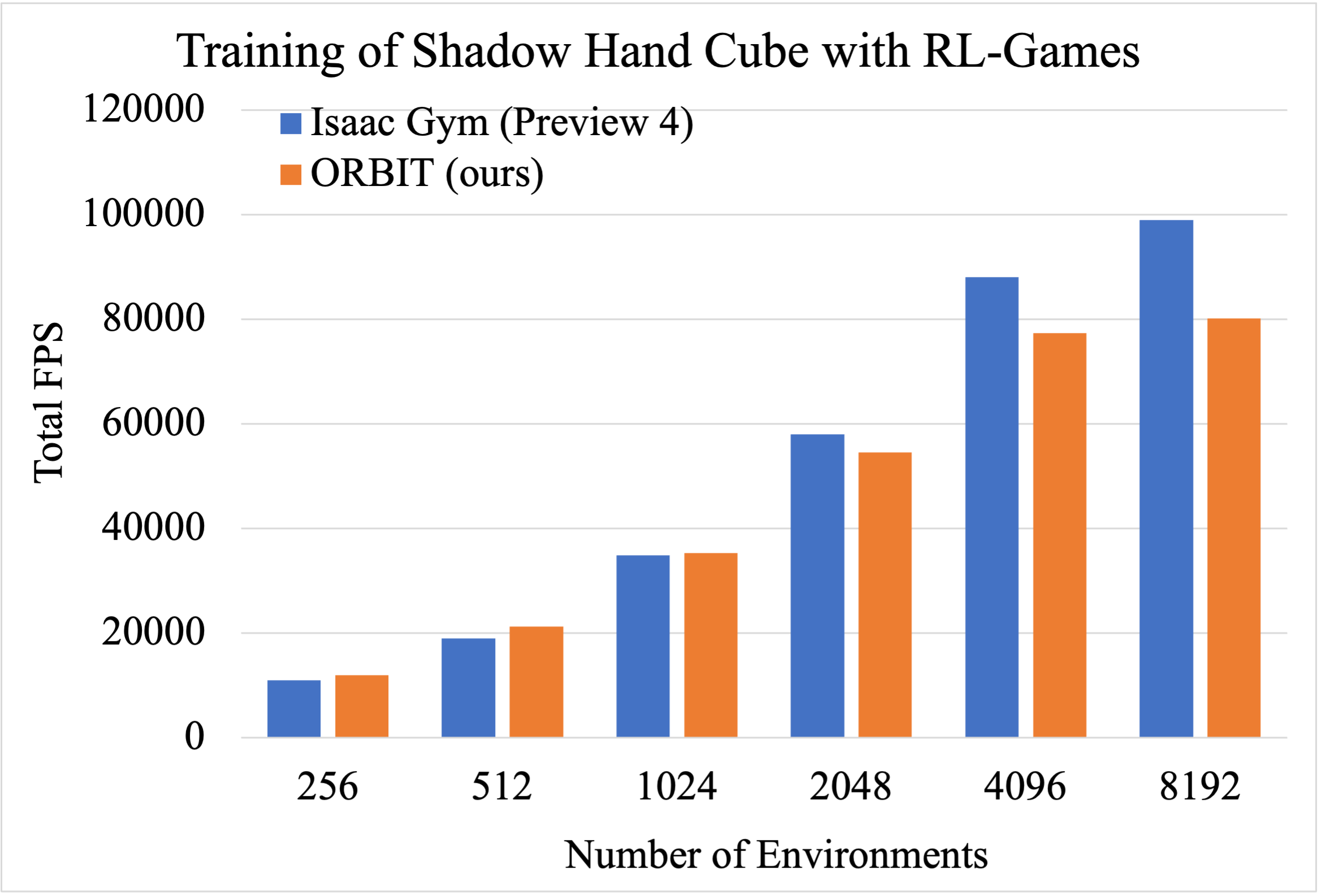

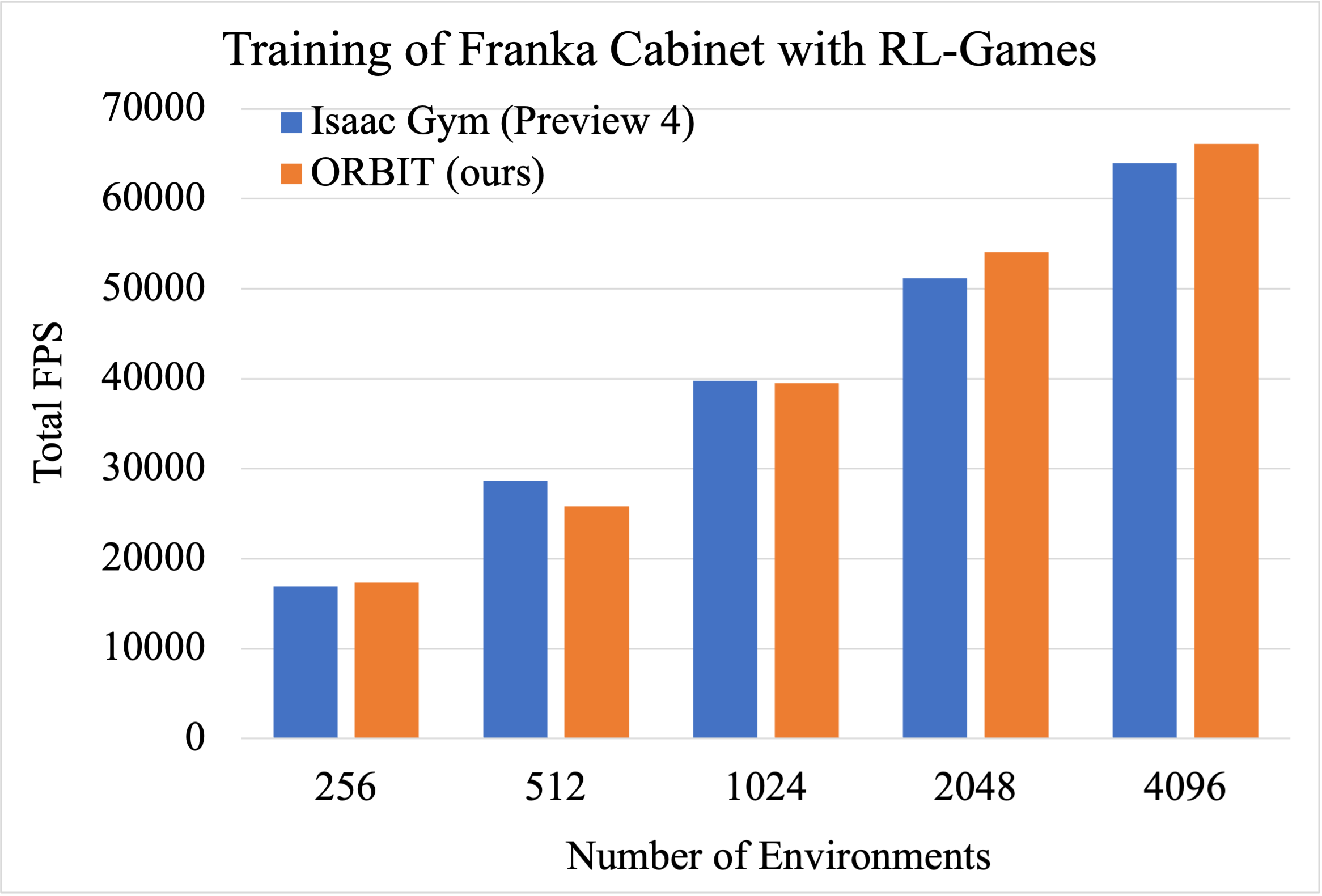

We compare the throughput of environments in Orbit with those in other popular frameworks. All comparisons use the same hardware: a computer with a 16-core AMD Ryzen 9 5950X CPU, 64 GB of RAM, and an NVIDIA GeForce RTX 3090 GPU. We measure throughput as the number of frames per second (FPS) that an environment generates.

* These numbers were computed using Isaac Sim 2022.1.0.
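As a rough illustration of how such a throughput number can be computed, the sketch below times a batched environment and divides the total number of frames by the elapsed wall-clock time. It assumes one frame means one environment step, so a single batched step over N parallel environments counts as N frames. `ToyBatchedEnv` is a hypothetical stand-in for a GPU-parallel environment, and the injectable `clock` argument exists only to make the arithmetic testable.

```python
import time


class ToyBatchedEnv:
    """Hypothetical stand-in for a vectorized environment: one step advances all envs."""

    def __init__(self, num_envs):
        self.num_envs = num_envs
        self.state = [0.0] * num_envs

    def step(self, actions):
        self.state = [s + a for s, a in zip(self.state, actions)]
        return self.state


def measure_fps(env, num_steps, clock=time.perf_counter):
    """Frames per second = (parallel envs x steps) / elapsed wall-clock seconds."""
    actions = [0.01] * env.num_envs
    start = clock()
    for _ in range(num_steps):
        env.step(actions)
    elapsed = clock() - start
    return env.num_envs * num_steps / elapsed
```

For example, 4096 environments stepped 100 times in 2 seconds correspond to 4096 × 100 / 2 = 204,800 FPS. This is why parallelizing across many environments on one GPU raises throughput far above what a single environment achieves.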

If you use Orbit in your research, please cite our paper:

@article{mittal2023orbit,
  title={Orbit: A Unified Simulation Framework for Interactive Robot Learning Environments},
  author={Mittal, Mayank and Yu, Calvin and Yu, Qinxi and Liu, Jingzhou and Rudin, Nikita and Hoeller, David and Yuan, Jia Lin and Singh, Ritvik and Guo, Yunrong and Mazhar, Hammad and Mandlekar, Ajay and Babich, Buck and State, Gavriel and Hutter, Marco and Garg, Animesh},
  journal={IEEE Robotics and Automation Letters},
  year={2023},
  volume={8},
  number={6},
  pages={3740-3747},
  doi={10.1109/LRA.2023.3270034}
}